A Smooth Representation of SO(3) for Deep Rotation Learning with Uncertainty


Best Student Paper Award, RSS 2020

There are many ways to represent rotations: Euler angles, rotation matrices, axis-angle vectors, or unit quaternions, for example. In deep learning, it is common to use unit quaternions for their simple geometric and algebraic structure. However, unit quaternions lack an important smoothness property that makes learning ‘large’ rotations difficult, and other representations are not easily amenable to learning uncertainty. In this work, we address this gap and present a smooth representation that defines a belief (or distribution) over rotations.


Valentin Peretroukhin, Matthew Giamou, David M. Rosen, W. Nicholas Greene, Nicholas Roy, and Jonathan Kelly

Robotics: Science and Systems (RSS) 2020

Code

Available on Github

Abstract

Accurate rotation estimation is at the heart of robot perception tasks such as visual odometry and object pose estimation. Deep learning has recently provided a new way to perform these tasks, and the choice of rotation representation is an important part of network design.

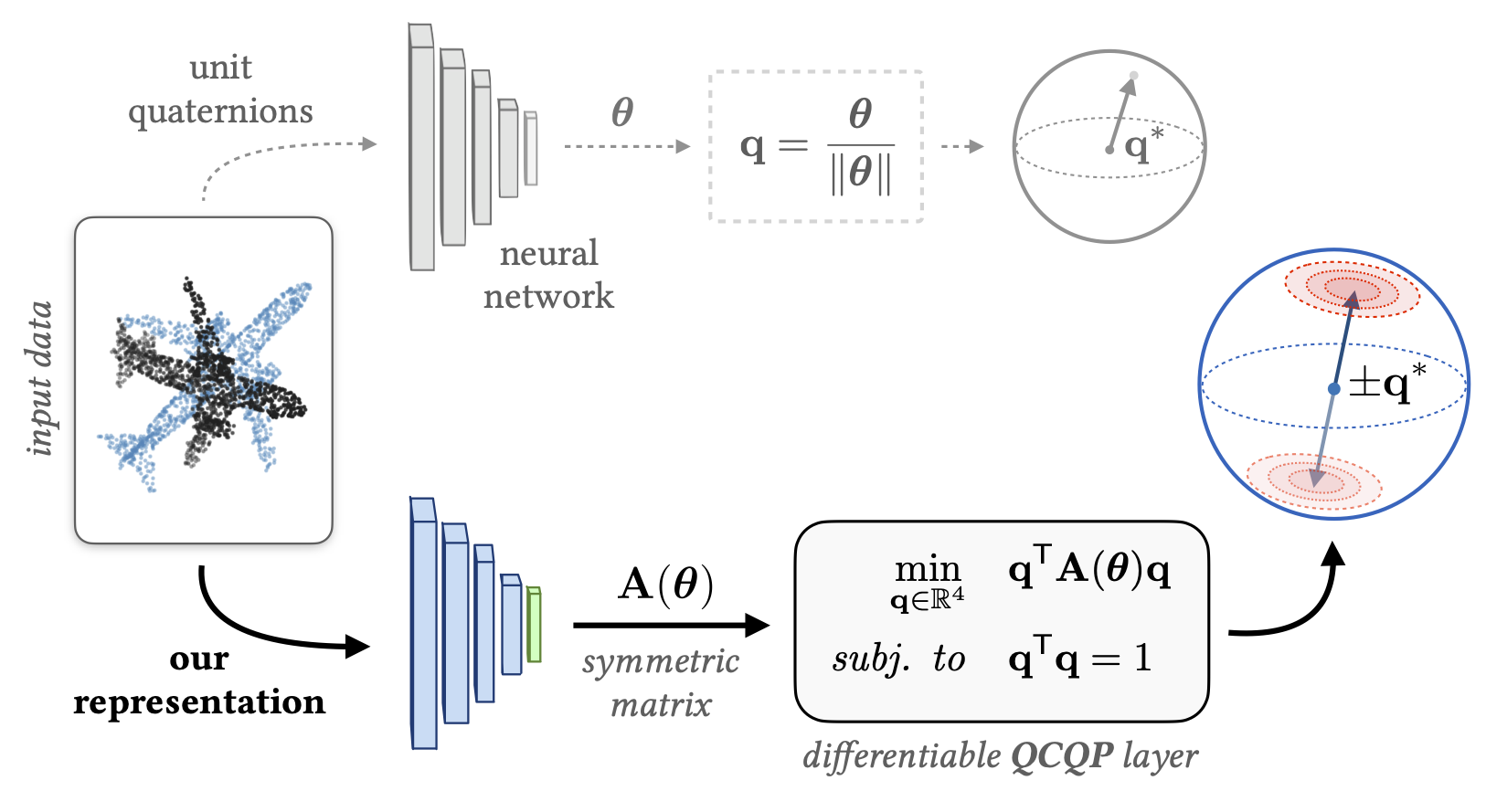

In this work, we present a novel symmetric matrix representation of rotations that is singularity-free and requires marginal computational overhead. We show that our representation has two important properties that make it particularly suitable for learned models: (1) it satisfies a smoothness property that improves convergence and generalization when regressing large rotation targets, and (2) it encodes a symmetric Bingham belief over the space of unit quaternions, permitting the training of uncertainty-aware models.
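As a rough illustration of how a symmetric matrix can stand in for a rotation, the sketch below assumes that a network emits 10 free parameters, that these fill the upper triangle of a 4x4 symmetric matrix, and that the predicted unit quaternion is the eigenvector associated with the smallest eigenvalue. The function names, the parameter ordering, and the sign convention are hypothetical choices made for this example, not details taken from the text above.

```python
import numpy as np


def symmetric_from_params(theta):
    """Build a 4x4 symmetric matrix from 10 free parameters.

    Assumption: the parameters fill the upper triangle in row-major order.
    """
    theta = np.asarray(theta, dtype=float)
    if theta.shape != (10,):
        raise ValueError("expected exactly 10 parameters")
    A = np.zeros((4, 4))
    A[np.triu_indices(4)] = theta
    # Mirror the strict upper triangle into the lower triangle.
    return A + np.triu(A, k=1).T


def quaternion_from_symmetric(A):
    """Return the unit eigenvector for the smallest eigenvalue of A.

    This vector minimizes q^T A q subject to ||q|| = 1.
    """
    eigvals, eigvecs = np.linalg.eigh(A)  # eigenvalues in ascending order
    q = eigvecs[:, 0]
    # q and -q encode the same rotation; pick one sign for determinism.
    if q[0] < 0:
        q = -q
    return q, eigvals


# Usage: a matrix whose minimizer is known by construction.
q0 = np.array([0.5, 0.5, 0.5, 0.5])
A0 = np.eye(4) - np.outer(q0, q0)
q_hat, _ = quaternion_from_symmetric(A0)
```

Because the eigenvector is defined only up to sign, any loss built on this output should treat `q` and `-q` as the same rotation.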

We empirically validate the benefits of our formulation by training deep neural rotation regressors on two data modalities. First, we use synthetic point-cloud data to show that our representation leads to superior predictive accuracy over existing representations for arbitrary rotation targets. Second, we use vision data collected onboard ground and aerial vehicles to demonstrate that our representation is amenable to an effective out-of-distribution (OOD) rejection technique that significantly improves the robustness of rotation estimates to unseen environmental effects and corrupted input images, without requiring the use of an explicit likelihood loss, stochastic sampling, or an auxiliary classifier. This capability is key for safety-critical applications where detecting novel inputs can prevent catastrophic failure of learned models.
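One way such a rejection rule could work, without an extra classifier or sampling, is to threshold a scalar summary of the predicted matrix's eigenvalues. The sketch below assumes a belief of the form p(q) ∝ exp(-qᵀAq), so that larger gaps between the smallest eigenvalue and the others mean a more concentrated belief. The statistic, the function names, and the threshold value are illustrative assumptions; the text above does not specify the exact criterion the authors use.

```python
import numpy as np


def eigenvalue_spread(A):
    """Sum of gaps between the smallest eigenvalue of A and the other three.

    Assumption: under p(q) proportional to exp(-q^T A q), a larger spread
    corresponds to a more peaked (more confident) belief.
    """
    eigvals = np.linalg.eigvalsh(A)  # ascending order
    return float(np.sum(eigvals[1:] - eigvals[0]))


def accept_estimate(A, threshold):
    """Keep an estimate only if its spread meets a (hypothetical) threshold."""
    return eigenvalue_spread(A) >= threshold


# Usage: a sharply peaked matrix versus a nearly flat one.
q0 = np.array([0.5, 0.5, 0.5, 0.5])
projector = np.eye(4) - np.outer(q0, q0)
A_peaked = 100.0 * projector
A_flat = 0.01 * projector
keep_peaked = accept_estimate(A_peaked, threshold=1.0)
keep_flat = accept_estimate(A_flat, threshold=1.0)
```

In practice the threshold would have to be chosen from held-out data, for example as a quantile of the spread observed on the training set.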

Citation

@inproceedings{2020_Peretroukhin_Smooth,
  address = {Corvallis, Oregon, USA},
  author = {Valentin Peretroukhin and Matthew Giamou and David Rosen and W. Nicholas Greene and Nicholas Roy and Jonathan Kelly},
  booktitle = {Proceedings of Robotics: Science and Systems},
  date = {2020-07-12/2020-07-16},
  doi = {10.15607/RSS.2020.XVI.007},
  month = {Jul. 12--16},
  title = {A Smooth Representation of Belief over {SO(3)} for Deep Rotation Learning with Uncertainty},
  url = {https://arxiv.org/abs/2006.01031},
  year = {2020}
}