Multimodal and Force-Matched Imitation Learning with a See-Through Visuotactile Sensor

Trevor Ablett1, Oliver Limoyo1, Adam Sigal2, Affan Jilani3, Jonathan Kelly1, Kaleem Siddiqi3, Francois Hogan2, Gregory Dudek2,3

1University of Toronto, 2Samsung AI Centre, Montreal, QC, Canada, 3McGill University

*work completed while Trevor Ablett, Oliver Limoyo, and Affan Jilani were on internship at Samsung AI Centre, Montreal

IEEE Transactions on Robotics (T-RO): Special Section on Tactile Robotics (to appear), to be presented at ICRA 2025

Motivation

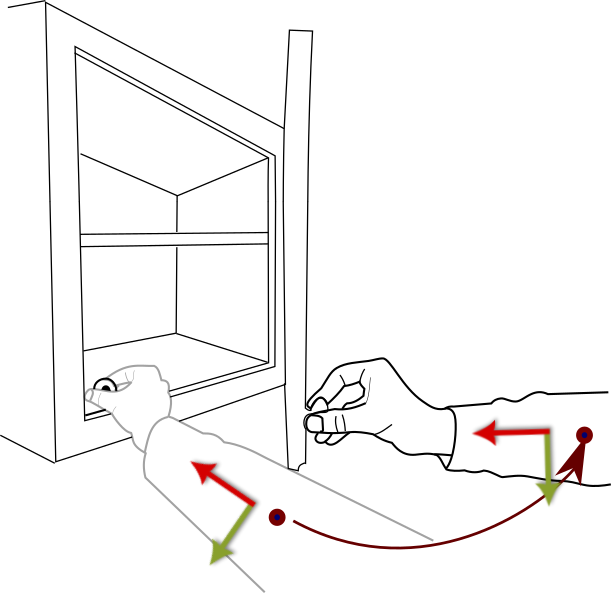

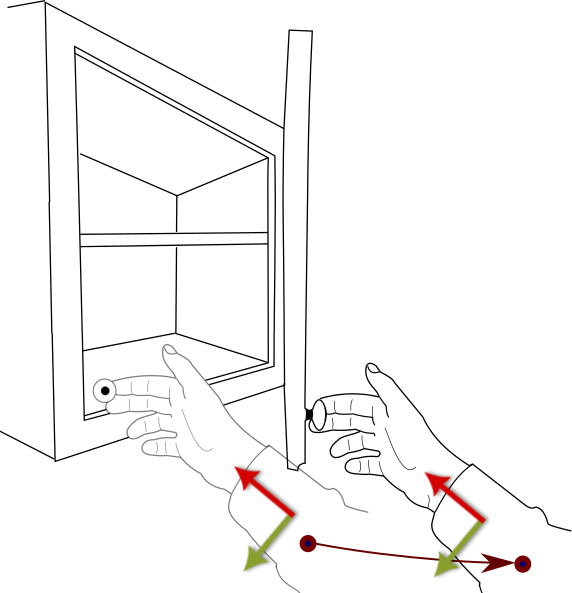

The conventional approach to manipulating articulated objects such as doors and drawers with robots relies on a firm, stable grasp of the handle followed by a large arm motion to complete the opening/closing task.

In contrast, humans are capable of opening and closing doors with minimal arm motions, by relaxing their grasp on the handle and allowing for relative motion between their fingers and the handle. This work aims to learn robot policies for door opening that are more in line with human manipulation, by leveraging high-resolution visual and tactile feedback to control the contact interactions between the robot end-effector and the handle.

Why Kinesthetic Teaching?

Teleoperation is a viable strategy for collecting demonstrations, but it requires a proxy for contact feedback. In the teleoperation example above, we used the vibration motor in the VR controller and the force-torque sensor to provide coarse feedback, but demonstrations were still difficult to collect. Even with considerable practice, we still often caused the robot to enter a protective stop by applying excessive force to the environment.

Kinesthetic teaching, as shown above, provides contact feedback directly. The operator can react naturally to environmental contact forces, which alleviates this issue.

Kinesthetic teaching has a significant downside, however: the observation and action spaces during demonstrations do not match those of the robot. This can be partially resolved by using replays, where the robot is commanded to reach the same poses achieved by the human during the demonstration. However, if the human imparts a force on the environment during the demonstration, a replay that tracks only the recorded poses does not reproduce that force, and the replayed trajectory can fail.

Approach

See-through-Your-Skin (STS) Tactile Sensor

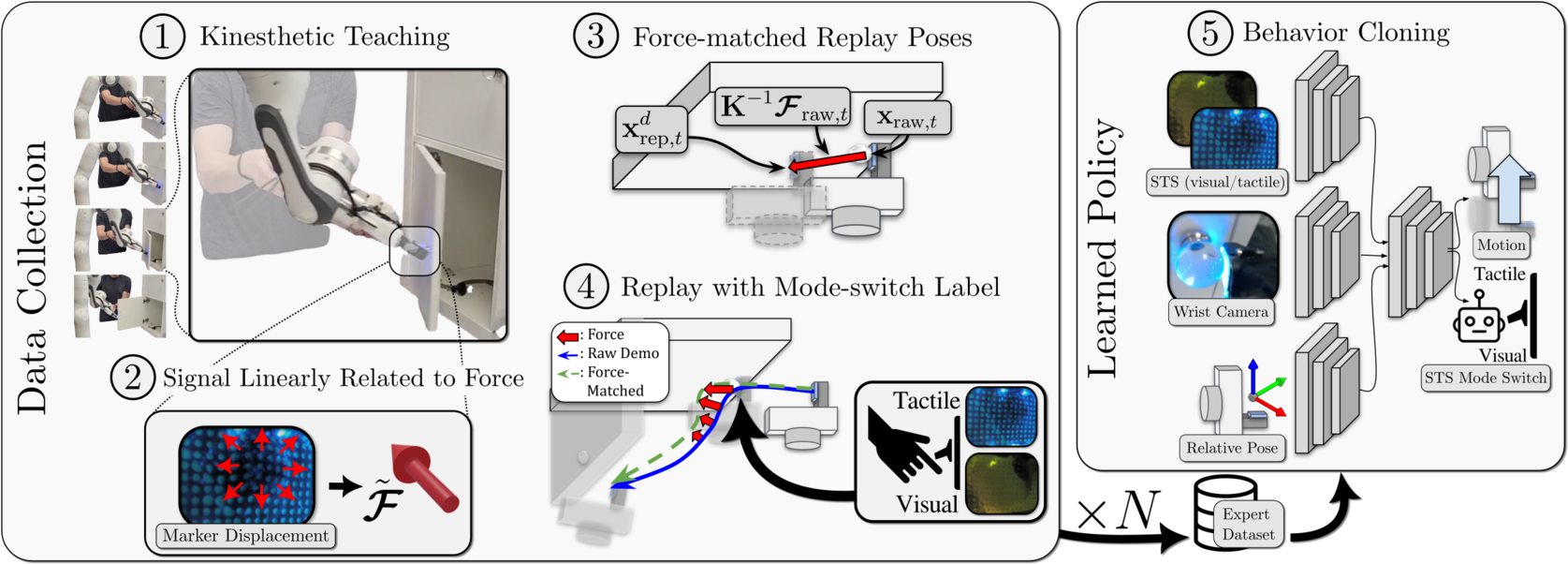

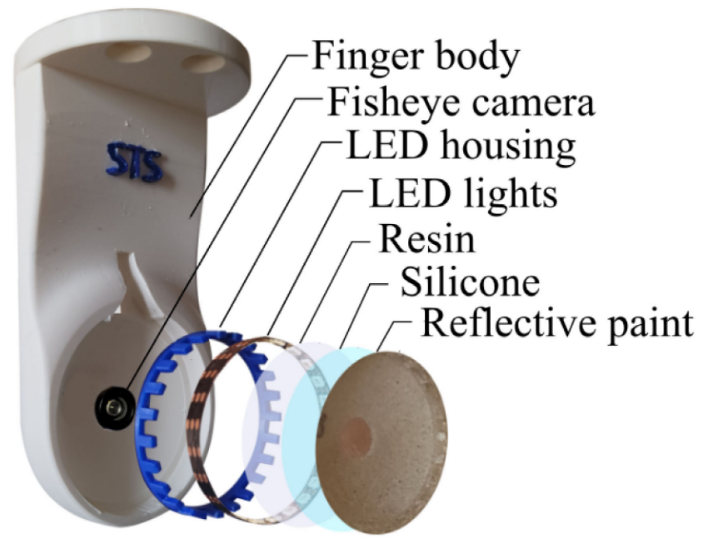

An STS sensor [1] is a visuotactile sensor, comparable to other gel-based tactile sensors [2], that can be switched between visual and tactile modes by leveraging a semi-transparent surface and controllable lighting. This allows for both pre-contact visual sensing and during-contact tactile sensing with a single sensor. In this work, we use the sensor in tactile mode to record a signal linearly related to force during demonstrations, and show through extensive experiments its value in both visual and tactile modes as an input to learned policies.

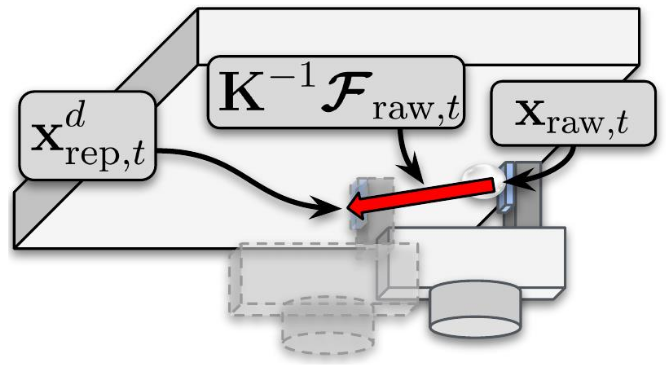

Tactile Force Matching

Our force-matched poses were generated by measuring a signal linearly related to force with an STS sensor, which was then used to modify the desired poses before they were input back into our Cartesian impedance controller. For more details, see our paper.
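The idea can be sketched under a simplifying assumption that is ours and not necessarily the paper's exact formulation: if each translational axis of the Cartesian impedance controller behaves like a spring, f = k (x_desired - x_actual), then shifting the desired position by f / k makes the controller push with roughly the demonstrated force once the end-effector reaches the demonstrated position. The calibration constants `gain` and `bias`, the stiffness values, and both function names below are hypothetical.

```python
def signal_to_force(signal, gain, bias=0.0):
    """Map a tactile signal to a force estimate with an assumed linear calibration."""
    return gain * signal + bias


def force_matched_position(demo_position, force, stiffness):
    """Offset a demonstrated position so a spring-like controller applies `force`.

    Assumes the static per-axis law f = k * (x_desired - x_actual), so the
    desired position must lead the demonstrated one by f / k on each axis.
    """
    if not (len(demo_position) == len(force) == len(stiffness)):
        raise ValueError("position, force, and stiffness must have equal length")
    if any(k <= 0 for k in stiffness):
        raise ValueError("stiffness must be positive on every axis")
    return tuple(x + f / k for x, f, k in zip(demo_position, force, stiffness))


# Hypothetical numbers: a 4 N push along -z with 400 N/m stiffness on every axis.
force_z = signal_to_force(signal=2.0, gain=-2.0)  # assumed calibration
target = force_matched_position((0.5, 0.0, 0.3), (0.0, 0.0, force_z), (400.0,) * 3)
```

With these illustrative values the desired position sits about 1 cm further along -z than the demonstrated one, so a pose-tracking replay presses into the handle instead of merely touching it.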

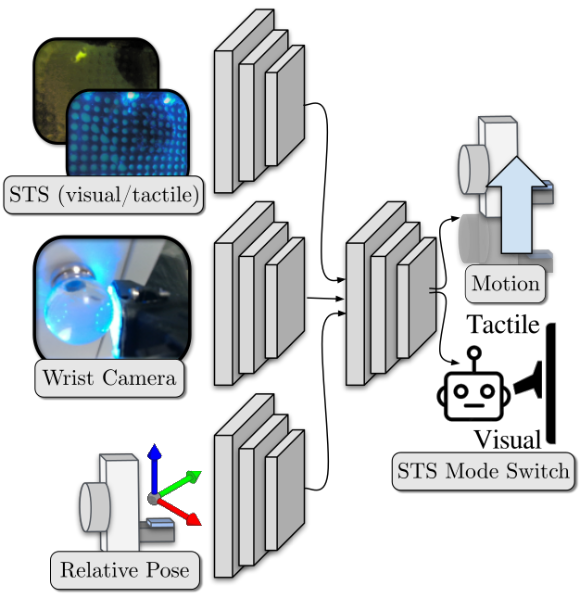

Learned STS Mode Switching

To further leverage an STS sensor for imitation learning, we add mode switching as a policy output, allowing the policy to learn the appropriate moment to switch an STS from its visual to its tactile mode.
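As a minimal sketch of what a mode-switch output could look like, assume the policy emits a 7-element action: six delta-pose values followed by one scalar that requests tactile mode when it exceeds a threshold. The action layout, the threshold, and the names `split_action`, `VISUAL`, and `TACTILE` are illustrative assumptions, not the paper's interface.

```python
VISUAL, TACTILE = "visual", "tactile"


def split_action(action, threshold=0.5):
    """Split a 7-element action into a 6-DoF delta pose and a requested STS mode."""
    if len(action) != 7:
        raise ValueError("expected 6 delta-pose values plus 1 mode value")
    delta_pose = tuple(action[:6])
    # Assumed convention: values above the threshold request tactile mode.
    mode = TACTILE if action[6] > threshold else VISUAL
    return delta_pose, mode
```

Treating the switch as one more action dimension means the same demonstrations that supervise the motion can also supervise when the sensor changes mode.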

Experiments

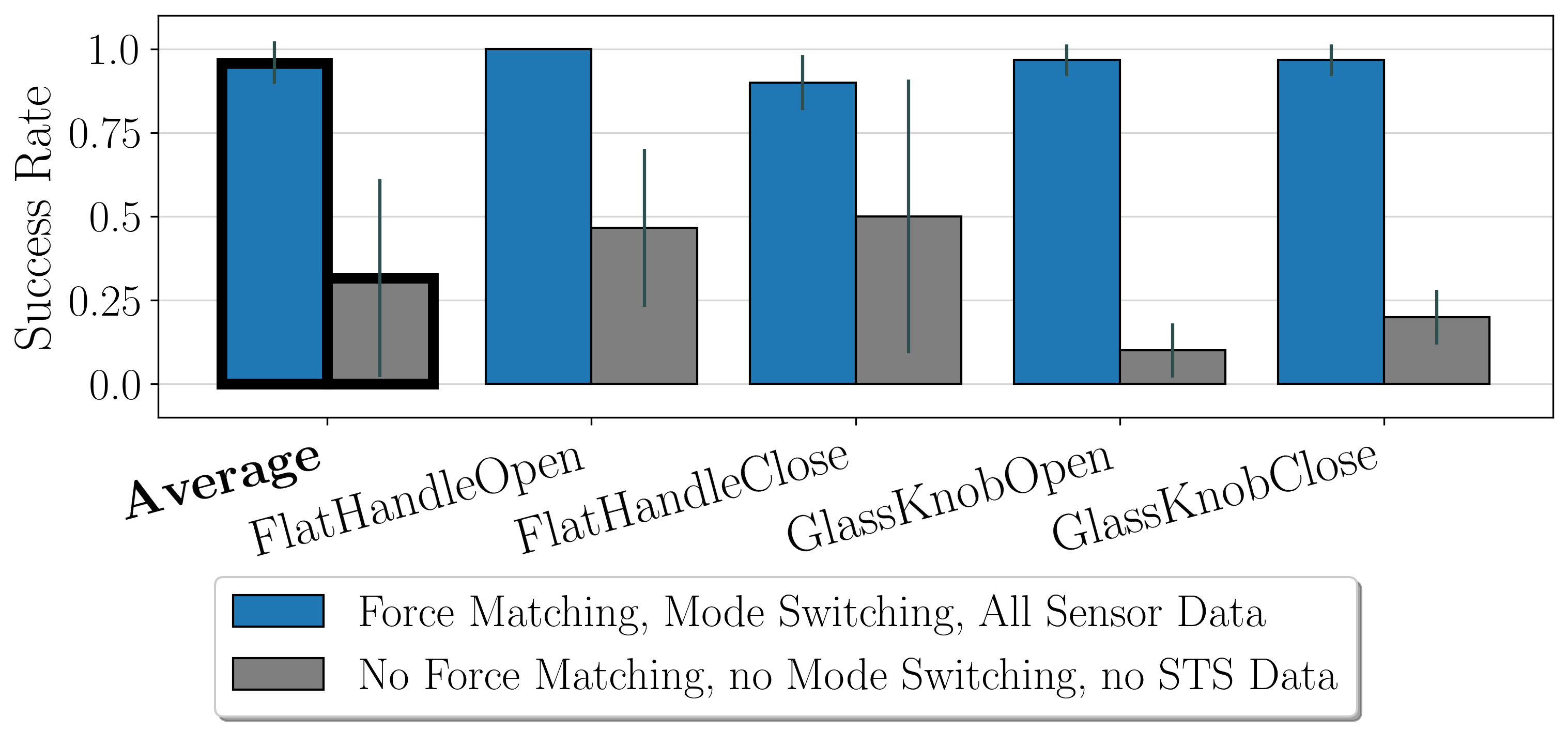

To verify the efficacy of force matching, learned mode switching, and tactile sensing in general, we study multiple observation configurations to compare and contrast the value of visual and tactile data from an STS with visual data from a wrist-mounted eye-in-hand camera.

Experimental Tasks

In this work, we study four challenging robotic tasks: door opening and door closing, each with a flat handle and with a glass knob. Every task starts from a randomized initial position, requiring a reach to initially make contact. This setup is meant to simulate a true mobile manipulator, where the initial pose between the arm and the handle/knob would not be consistent between each open/close. The closing tasks required shutting the door “quietly” without allowing it to slam.

Training Details

We collected 20 raw demonstrations for each task, and then completed replays of each demonstration under different conditions, such as including/excluding force matching and/or mode switching.

As shown in the Learned STS Mode Switching section, we trained policies with STS images, wrist camera images, and relative robot pose (i.e., relative to the beginning of an episode) as inputs, with delta end-effector poses and mode switch control as outputs.
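To illustrate what a pose "relative to the beginning of an episode" means, the sketch below expresses a current pose in the frame of the episode's first pose. It is simplified to planar poses (x, y, yaw) for brevity; the real system works with full end-effector poses, and the function name is hypothetical.

```python
import math


def relative_pose_2d(start, current):
    """Express the planar pose `current` = (x, y, yaw) in the frame of `start`."""
    x0, y0, yaw0 = start
    x1, y1, yaw1 = current
    dx, dy = x1 - x0, y1 - y0
    c, s = math.cos(yaw0), math.sin(yaw0)
    # Rotate the world-frame offset into the start frame (transpose of R(yaw0)).
    rel_x = c * dx + s * dy
    rel_y = -s * dx + c * dy
    # Wrap the heading difference to (-pi, pi].
    rel_yaw = math.atan2(math.sin(yaw1 - yaw0), math.cos(yaw1 - yaw0))
    return rel_x, rel_y, rel_yaw
```

An input of this form does not depend on where the arm starts in the world frame, which fits tasks whose initial position is randomized.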

For all experiments, we trained 3 seeds of each policy, and then ran each policy for 10 episodes in each configuration.
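Read literally, this protocol gives 3 × 10 = 30 evaluation episodes for each policy configuration. One plausible way to summarize such results, shown here only as a sketch with hypothetical function names, is to compute a success rate per seed and then average across seeds.

```python
from statistics import mean


def success_rate(outcomes):
    """Fraction of successful episodes in a non-empty sequence of booleans."""
    if not outcomes:
        raise ValueError("at least one episode outcome is required")
    return sum(outcomes) / len(outcomes)


def aggregate_over_seeds(outcomes_by_seed):
    """Return the per-seed success rates and their mean across seeds."""
    rates = [success_rate(outcomes) for outcomes in outcomes_by_seed]
    return rates, mean(rates)
```

Keeping the per-seed rates alongside the mean makes it possible to see how much a configuration varies between training runs.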

Results – Overall

The videos below show learned policies with force matching, mode switching, and STS data included.

Conclusion

Through over 3,000 test episodes from real-world manipulation experiments, we find that the inclusion of force matching raises average policy success rates by 62.5%, STS mode switching by 30.3%, and STS data as a policy input by 42.5%. Our results highlight the utility of see-through tactile sensing for IL, both for data collection to allow force matching, and for policy execution to allow accurate task feedback.

Code

Available on GitHub

Citation

@misc{ablettMultimodalForceMatchedImitation2023,
  title = {Multimodal and Force-Matched Imitation Learning with a See-Through Visuotactile Sensor},
  author = {Ablett, Trevor and Limoyo, Oliver and Sigal, Adam and Jilani, Affan and Kelly, Jonathan and Siddiqi, Kaleem and Hogan, Francois and Dudek, Gregory},
  year = {2023},
  month = dec,
  number = {arXiv:2311.01248},
  eprint = {2311.01248},
  primaryclass = {cs},
  publisher = ,
  doi = {10.48550/arXiv.2311.01248},
  urldate = {2024-02-15},
  archiveprefix = {arxiv},
  keywords = {Computer Science - Artificial Intelligence,Computer Science - Machine Learning,Computer Science - Robotics}
}

Bibliography

1. F. R. Hogan, J.-F. Tremblay, B. H. Baghi, M. Jenkin, K. Siddiqi, and G. Dudek, “Finger-STS: Combined Proximity and Tactile Sensing for Robotic Manipulation,” IEEE Robotics and Automation Letters, vol. 7, no. 4, pp. 10865–10872, Oct. 2022, doi: 10.1109/LRA.2022.3191812.

2. C. Chi, X. Sun, N. Xue, T. Li, and C. Liu, “Recent Progress in Technologies for Tactile Sensors,” Sensors, vol. 18, no. 4, Art. no. 4, Apr. 2018, doi: 10.3390/s18040948.