VibES: Induced Vibration for Persistent Event-Based Sensing

(3DV 2026)

Vincenzo Polizzi¹, Stephen Yang¹, Quentin Clark², Jonathan Kelly¹, Igor Gilitschenski², David B. Lindell²

¹University of Toronto, Robotics Institute | ²University of Toronto, Department of Computer Science

Abstract

Event cameras are a bio-inspired class of sensors that asynchronously measure per-pixel intensity changes. Under fixed illumination conditions in static or low-motion scenes, rigidly mounted event cameras are unable to generate any events, becoming unsuitable for most computer vision tasks. To address this limitation, recent work has investigated motion-induced event stimulation that often requires complex hardware or additional optical components. In contrast, we introduce a lightweight approach to sustain persistent event generation by employing a simple rotating unbalanced mass to induce periodic vibrational motion. This is combined with a motion-compensation pipeline that removes the injected motion and yields clean, motion-corrected events for downstream perception tasks. We demonstrate our approach with a hardware prototype and evaluate it on real-world captured datasets. Our method reliably recovers motion parameters and improves both image reconstruction and edge detection over event-based sensing without motion induction.

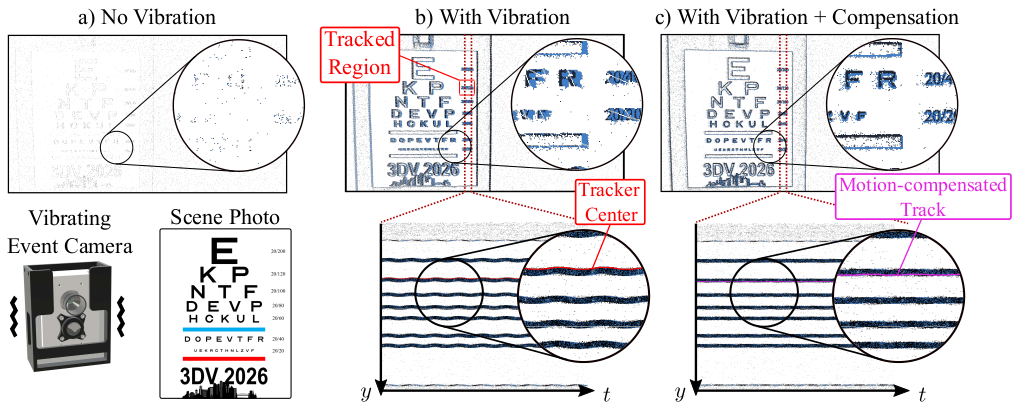

Compensation visualization: uncompensated events (left) and motion-compensated events (right).

Key Contributions

🔧 Vibrating Event Camera

We design a vibrating event camera using a simple rotating unbalanced mass that enables event generation in static scenes, addressing a fundamental limitation of event cameras.
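Here is a rough sketch of how a rotating unbalanced mass turns into camera motion. It assumes the camera mount behaves as a linear single-degree-of-freedom mass-spring-damper along one axis, which is an idealization. Under that assumption, the textbook forced-vibration result gives the steady-state amplitude X = m·e·ω² / sqrt((k - M·ω²)² + (c·ω)²). The function name and every parameter value below are hypothetical and are not taken from the paper.

```python
import math


def unbalance_amplitude(m_unb, ecc, omega, total_mass, k, c):
    """Steady-state displacement amplitude [m] of a 1-DOF mass-spring-damper
    excited by a rotating unbalanced mass (textbook forced-vibration model).

    m_unb      -- eccentric (unbalanced) mass on the motor shaft [kg]
    ecc        -- distance of that mass from the shaft axis [m]
    omega      -- motor angular speed [rad/s]
    total_mass -- total vibrating mass, camera included [kg]
    k, c       -- mount stiffness [N/m] and damping [N*s/m]
    """
    force = m_unb * ecc * omega**2  # amplitude of the rotating centrifugal force
    dynamic_stiffness = math.hypot(k - total_mass * omega**2, c * omega)
    return force / dynamic_stiffness


# Hypothetical parameters: 1 g at 2 mm eccentricity, 300 g camera, soft mount.
for hz in (5.0, 13.0, 25.0):
    x = unbalance_amplitude(0.001, 0.002, 2 * math.pi * hz, 0.3, 2000.0, 5.0)
    print(f"{hz:5.1f} Hz -> {x * 1e6:.1f} um")
```

In this model the amplitude peaks near ω = sqrt(k/M) and tends toward m·e/M far above resonance. Motor speed and mount stiffness therefore jointly set how far the image moves.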

⚡ Real-Time Compensation

We introduce a real-time motion-compensation pipeline that estimates and removes induced vibrations online, without requiring calibration or prior knowledge of physical parameters.
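The online estimation idea can be illustrated with a minimal extended Kalman filter. The sketch assumes a single image axis whose centroid position follows y(t) = A·sin(φ(t)) + b, with φ advancing at angular frequency ω. The class name, state layout, noise levels and synthetic data are hypothetical choices for illustration, not the VibES filter itself. Because `predict` accepts an arbitrary time step, it handles the irregular timing of event-driven measurements.

```python
import numpy as np


class SinusoidEKF:
    """Extended Kalman filter for y(t) = A*sin(phi(t)) + b, with dphi/dt = omega.

    State x = [A, omega, phi, b]: amplitude, angular frequency [rad/s],
    phase [rad] and mean position. This parameterization is an assumption
    made for illustration, not the paper's exact filter.
    """

    def __init__(self, x0, P0, q, r):
        self.x = np.asarray(x0, dtype=float)
        self.P = np.asarray(P0, dtype=float)
        self.Q = np.diag(q)  # process-noise intensities, scaled by dt below
        self.R = float(r)  # measurement-noise variance

    def predict(self, dt):
        F = np.eye(4)
        F[2, 1] = dt  # phase advances by omega * dt; other states stay constant
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + self.Q * dt

    def update(self, y):
        A, _, phi, b = self.x
        H = np.array([np.sin(phi), 0.0, A * np.cos(phi), 1.0])  # Jacobian of h(x)
        innovation = y - (A * np.sin(phi) + b)
        S = H @ self.P @ H + self.R
        K = self.P @ H / S
        self.x = self.x + K * innovation
        self.P = (np.eye(4) - np.outer(K, H)) @ self.P
        self.P = 0.5 * (self.P + self.P.T)  # keep the covariance symmetric


# Synthetic, irregularly sampled centroid track (hypothetical values): 15 Hz.
rng = np.random.default_rng(0)
dts = rng.uniform(0.5e-3, 1.5e-3, size=2000)
ts = np.cumsum(dts)
ys = 2.0 * np.sin(2 * np.pi * 15.0 * ts + 0.3) + 5.0 + rng.normal(0.0, 0.05, ts.size)

# Initial guess, e.g. from a coarse frequency estimate.
ekf = SinusoidEKF(
    x0=[1.8, 2 * np.pi * 14.8, 0.3, 5.0],
    P0=np.diag([0.25, 4.0, 0.05, 0.25]),
    q=[1e-4, 1e-2, 1e-4, 1e-4],
    r=0.05**2,
)
for dt, y in zip(dts, ys):
    ekf.predict(dt)
    ekf.update(y)

f_est = ekf.x[1] / (2 * np.pi)
print(f"estimated frequency: {f_est:.2f} Hz")
```

A small random-walk process noise on ω lets the filter follow slow drifts in motor speed without being re-initialized.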

Method Overview

Our approach combines mechanical vibration with computational motion compensation to enable persistent event generation:

- Mechanical Vibration: A DC motor with an eccentric mass induces controlled sinusoidal motion through a mass-spring-damper system

- Event Tracking: The HASTE tracker extracts centroid trajectories from the event stream

- Frequency Estimation: A non-uniform FFT (NUFFT) estimates the oscillation frequency

- Extended Kalman Filter: Continuously tracks motion parameters (amplitude, frequency, phase)

- Motion Compensation: Removes induced vibration to yield clean, motion-corrected events

Figure: VibES processing pipeline from vibration induction to motion-compensated events
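The frequency-estimation step can be sketched as follows. Tracker outputs arrive at irregular times, so an ordinary FFT does not apply directly; a non-uniform Fourier transform instead evaluates the spectrum at the actual timestamps. The code uses direct O(N·F) summation. A NUFFT library such as FINUFFT approximates the same sums much faster. The function names, search band and synthetic data are hypothetical, and the paper's exact NUFFT configuration is not reproduced here.

```python
import numpy as np


def nudft_power(t, x, freqs):
    """Power spectrum of samples x taken at irregular times t [s], evaluated
    at the given frequencies [Hz] by direct non-uniform DFT summation."""
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    x = x - x.mean()  # drop the DC term (mean centroid position)
    kernel = np.exp(-2j * np.pi * np.outer(freqs, t))  # shape (n_freqs, n_samples)
    return np.abs(kernel @ x) ** 2


def dominant_frequency(t, x, f_min, f_max, df):
    """Frequency [Hz] with the largest non-uniform DFT power in [f_min, f_max)."""
    freqs = np.arange(f_min, f_max, df)
    return float(freqs[np.argmax(nudft_power(t, x, freqs))])


# Synthetic centroid x-coordinate sampled at irregular (event-driven) times.
rng = np.random.default_rng(1)
t = np.sort(rng.uniform(0.0, 1.0, size=500))
x = 120.0 + 3.0 * np.sin(2 * np.pi * 12.5 * t) + rng.normal(0.0, 0.1, t.size)

f_peak = dominant_frequency(t, x, 1.0, 40.0, 0.02)
print(f_peak)
```

A coarse peak like this is a natural initial frequency for a recursive filter, which then refines it.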

Qualitative Results

Comparison showing (a) No Vibration: blurred accumulated events, (b) With Vibration: increased event density, (c) With Compensation: sharp, motion-corrected results

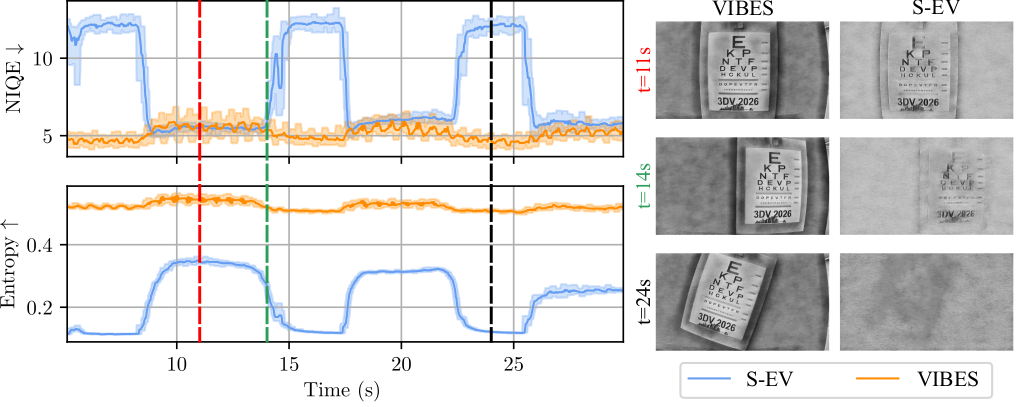
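The sharpening in panel (c) can be reproduced in miniature. Given estimated vibration parameters, each event is shifted back by the displacement at its own timestamp before the events are accumulated. This sketch assumes one global sinusoidal image shift per axis with a shared frequency. All names and values are hypothetical, and the paper's compensation model may differ. The variance of the accumulated image serves as a simple sharpness proxy.

```python
import numpy as np


def compensate_events(xs, ys, ts, amp_x, amp_y, freq, phase_x, phase_y):
    """Undo an estimated sinusoidal image shift at each event timestamp.

    Assumes the vibration appears as one global image-plane translation
    (roughly true for distant or planar scenes); depth-dependent parallax
    would need a per-point model.
    """
    w = 2 * np.pi * freq
    return (xs - amp_x * np.sin(w * ts + phase_x),
            ys - amp_y * np.sin(w * ts + phase_y))


def accumulate(xs, ys, shape):
    """Event-count image: round coordinates to pixels and count hits."""
    img = np.zeros(shape, dtype=int)
    xi, yi = np.rint(xs).astype(int), np.rint(ys).astype(int)
    inside = (xi >= 0) & (xi < shape[1]) & (yi >= 0) & (yi < shape[0])
    np.add.at(img, (yi[inside], xi[inside]), 1)
    return img


# A single static scene point at (32, 20) seen through a 15 Hz vibration.
ts = np.linspace(0.0, 0.2, 400)
params = dict(amp_x=3.0, amp_y=1.5, freq=15.0, phase_x=0.0, phase_y=0.7)
w = 2 * np.pi * params["freq"]
xs = 32.0 + params["amp_x"] * np.sin(w * ts + params["phase_x"])
ys = 20.0 + params["amp_y"] * np.sin(w * ts + params["phase_y"])

raw = accumulate(xs, ys, (48, 64))
comp = accumulate(*compensate_events(xs, ys, ts, **params), (48, 64))
# Sharper images concentrate events in fewer pixels, which raises the variance.
print(raw.var(), comp.var())
```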

Performance Across Datasets

| Dataset | Metric | S-EV | VibES | Improvement |
|---|---|---|---|---|
| Logo | Entropy ↑ | 0.21±0.09 | 0.52±0.01 | +148% |
| Logo | NIQE ↓ | 8.6±9.4 | 5.1±0.3 | -41% |
| Pattern Checkerboard | Variance ↑ | 0.16±0.11 | 3.16±0.33 | +1875% |
| AMI-EV | Entropy ↑ | 0.08±0.05 | 0.24±0.04 | +200% |
| Pattern | Gradient Mag. ↑ | 0.19±0.09 | 0.30±0.06 | +58% |

S-EV denotes event-based sensing without induced vibration (the baseline). ↑ and ↓ indicate whether higher or lower values are better.
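The Improvement column is consistent with the relative change of the VibES mean with respect to the S-EV mean. For NIQE, where lower is better, the negative change is the improvement. The quick check below reproduces the column; the function name is ours, chosen for illustration.

```python
def relative_change(baseline, ours):
    """Percent change of `ours` with respect to `baseline` (mean values)."""
    return 100.0 * (ours - baseline) / baseline


rows = [  # (dataset, metric, S-EV mean, VibES mean) from the performance table
    ("Logo", "Entropy", 0.21, 0.52),
    ("Logo", "NIQE", 8.6, 5.1),
    ("Pattern Checkerboard", "Variance", 0.16, 3.16),
    ("AMI-EV", "Entropy", 0.08, 0.24),
    ("Pattern", "Gradient Mag.", 0.19, 0.30),
]
for dataset, metric, s_ev, vibes in rows:
    print(f"{dataset:<22} {metric:<14} {relative_change(s_ev, vibes):+.0f}%")
```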

Hardware Prototype

Physical prototype showing the event camera with the vibration mechanism


Applications

📸 Image Reconstruction

Generate high-quality images from events, even in static scenes. E2VID reconstruction quality improves on the Logo scene, where the NIQE score is 41% lower (better) than with the S-EV baseline.

🔍 Edge Detection

Extract clean, continuous edges with less fragmentation. Contour length increased by 87%, and the junction count rose on every scene (e.g., from 136.5 to 979.3 on Logo).

📊 Frequency Estimation

Accurately estimate the vibration frequencies of objects in the scene. Tested over the 7.5–22.5 Hz range with errors below 0.1 Hz.

📏 Relative Depth Awareness

Parallax from the induced motion encodes depth information. VibES successfully predicted relative depth ratios of 0.33, 0.50, and 0.66 in synthetic scenes.
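The pinhole model shows why parallax encodes depth. If the camera translates by an amplitude T parallel to the image plane, a point at depth Z moves by roughly a = f·T/Z pixels. The ratio of two image amplitudes therefore gives the inverse ratio of their depths, and the unknown f and T cancel. This sketch assumes pure translation; rotational image motion does not depend on depth and would have to be separated out first. The function names and numbers are hypothetical.

```python
def parallax_amplitude(focal_px, translation_m, depth_m):
    """Image-plane vibration amplitude [px] of a point at depth Z under a
    camera translation of the given amplitude, parallel to the image plane."""
    return focal_px * translation_m / depth_m


def relative_depths(amplitudes_px, ref_index=0):
    """Depths relative to a reference point: Z_i / Z_ref = a_ref / a_i."""
    a_ref = amplitudes_px[ref_index]
    return [a_ref / a for a in amplitudes_px]


# Hypothetical setup: 500 px focal length, 3 mm vibration, points at 4 m, 1 m, 2 m.
amps = [parallax_amplitude(500.0, 0.003, z) for z in (4.0, 1.0, 2.0)]
print([round(r, 3) for r in relative_depths(amps)])
```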

Power Efficiency Comparison

| Method | Motor Type | Power (W) | Notes |
|---|---|---|---|
| VibES (Ours) | DC hobby motor | 0.282 | Full power |
| Orchard et al. | Dynamixel MX-28T | 2.4 | Standby |
| Testa et al. (2020) | PTU-D46-17 | 6–13 | Low to full power |
| Testa et al. (2023) | PTU-E46 | 6–13 | Low to full power |
| AMI-EV | DJI M2006 BLDC | 14.4 | No-load |

Edge Quality Comparison

| Scene | Method | Avg. Components ↓ | Avg. Length ↑ | Junction Count |
|---|---|---|---|---|
| Logo | S-EV | 265.8±221.4 | 3.6±3.1 | 136.5±165.8 |
| Logo | VibES | 175.0±52.5 | 32.1±10.8 | 979.3±349.2 |
| Pattern Checkerboard | S-EV | 300.3±232.9 | 6.7±5.3 | 193.2±176.3 |
| Pattern Checkerboard | VibES | 140.7±40.2 | 49.9±17.3 | 691.3±63.2 |
| AMI-EV | S-EV | 91.1±17.2 | 4.2±2.4 | 63.3±54.6 |
| AMI-EV | VibES | 82.9±14.1 | 8.3±1.6 | 147.9±31.8 |
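The exact definitions behind these edge statistics are not given here. The sketch below follows one plausible reading, with every definition being our assumption rather than the paper's evaluation code:

- the input is a one-pixel-wide binary edge map (for example, Canny output);
- pixels are grouped with 8-connectivity;
- length is the number of pixels in a connected component;
- a junction is a connected cluster of edge pixels that each have three or more edge neighbours.

```python
from collections import deque

import numpy as np

# 8-connected neighbourhood offsets (dy, dx).
OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]


def neighbor_count(mask):
    """Number of 8-connected foreground neighbours of every pixel."""
    padded = np.pad(mask.astype(int), 1)
    h, w = mask.shape
    return sum(padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w] for dy, dx in OFFSETS)


def component_sizes(mask):
    """Pixel counts of the 8-connected components of a boolean mask (BFS)."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    sizes = []
    for y, x in zip(*np.nonzero(mask)):
        if seen[y, x]:
            continue
        seen[y, x] = True
        queue, size = deque([(y, x)]), 0
        while queue:
            cy, cx = queue.popleft()
            size += 1
            for dy, dx in OFFSETS:
                ny, nx = cy + dy, cx + dx
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        sizes.append(size)
    return sizes


def edge_metrics(edges):
    """Components, mean component length [px] and junctions of a thin edge map."""
    edges = np.asarray(edges, dtype=bool)
    sizes = component_sizes(edges)
    # Pixels with 3+ edge neighbours; each connected cluster counts as one junction.
    junction_mask = edges & (neighbor_count(edges) >= 3)
    return {
        "components": len(sizes),
        "avg_length": float(np.mean(sizes)) if sizes else 0.0,
        "junctions": len(component_sizes(junction_mask)),
    }


# A "+"-shaped edge: one component of 13 pixels with a single junction.
edges = np.zeros((9, 9), dtype=bool)
edges[4, 1:8] = True
edges[1:8, 4] = True
print(edge_metrics(edges))
```

Under these definitions, fragmented edges raise the component count and lower the average length, which matches the ↓/↑ directions in the table.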

Dataset Download

The dataset used to generate the results in the paper can be downloaded from the Hugging Face dataset hub.

Cite this work

@inproceedings{polizzi_2026_3DV,
  title={VibES: Induced Vibration for Persistent Event-Based Sensing},
  author={Polizzi, Vincenzo and Yang, Stephen and Clark, Quentin and Kelly, Jonathan and Gilitschenski, Igor and Lindell, David B.},
  booktitle={International Conference on 3D Vision (3DV)},
  year={2026}
}